Blog moved

Blog moved here: http://blog.andialbrecht.de.

Sorry for the inconvenience, but now I can use reStructuredText to write posts :)

hgsvn 0.1.8 released

A new maintenance release of hgsvn is available on PyPI. Go and grab it while it’s hot :)

Besides a lot of bug fixes this release includes a few new features and improvements:

- Matt Fowles added the so-called “airplane mode”: when using hgimportsvn with the --local-only flag, no network access is required to convert an already checked-out Subversion working copy into an hgsvn-controlled Mercurial repository.

- Commit messages for pushes back to SVN can be edited before committing by using the -e/--edit command-line flag. Patch by eliterr.

- sterin provided a patch that ensures no mq patches are applied when running hgpushsvn or hgpullsvn.

Thanks to all contributors for reporting bugs and providing patches!

Pluggable App Engine e-mail backends for Django

Yesterday support for pluggable e-mail backends has landed in Django’s trunk and today I’m happy to announce two e-mail backend implementations to be used with Django-based App Engine applications.

The e-mail backends allow you to use native Django functions for sending e-mails like django.core.mail.send_mail() on App Engine.

To use pluggable e-mail backends you'll need a recent version of Django. Support for pluggable e-mail backends was introduced in revision 11709 and will be released in Django 1.2.

Using the e-mail backend

The e-mail backends are available as a single package on bitbucket. To check out the most recent sources run:

```
$ hg clone http://bitbucket.org/andialbrecht/appengine_emailbackends/
```

or go to the downloads page and grab a zip file.

To use the e-mail backend for App Engine, copy the appengine_emailbackend directory to the top-level directory of your App Engine application and add the following line to your settings.py:

```python
EMAIL_BACKEND = 'appengine_emailbackend'
```

If you prefer asynchronous e-mail delivery use this line in your settings.py instead:

```python
EMAIL_BACKEND = 'appengine_emailbackend.async'
```

When using the async backend, e-mails are not sent immediately but delivered via a task queue.
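The difference between the two backends can be sketched without App Engine or Django. The class below is a hypothetical stand-in, not the code from the appengine_emailbackends package: it only borrows the send_messages() method name from Django's backend interface, and a plain deque plays the role of the App Engine task queue.

```python
from collections import deque


class QueueingEmailBackend:
    """Hypothetical async-style backend: queue now, deliver later."""

    def __init__(self, deliver):
        self.deliver = deliver   # callable that actually sends one message
        self.queue = deque()     # stand-in for an App Engine task queue

    def send_messages(self, email_messages):
        # Only enqueue; nothing is delivered yet. Return how many were queued.
        count = 0
        for message in email_messages:
            self.queue.append(message)
            count += 1
        return count

    def run_pending(self):
        # What a task-queue worker would do later: drain the queue in order.
        delivered = 0
        while self.queue:
            self.deliver(self.queue.popleft())
            delivered += 1
        return delivered


outbox = []
backend = QueueingEmailBackend(outbox.append)
queued = backend.send_messages(['Hello', 'World'])
# outbox is still empty at this point; run_pending() performs the delivery.
delivered = backend.run_pending()
```

The request that triggers the mail only pays for the enqueue; the actual delivery happens later, outside the request.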

Running the demo application

There’s also a demo application included in this repository. Run this application using

```
$ dev_appserver.py .
```

and open http://localhost:8080 in your web browser. Remember to include a current checkout of the Django SVN repository in the top-level directory of the demo application.

Dominion Set Designer

If you’re a regular Dominion player like me, you’re always looking for new card sets. Fortunately I had a little time this weekend to write a simple App Engine application that gives me a random card set on demand.

You can see it in action at http://dominionsets.appspot.com


That’s it, just a short post on this, now back to the next match :)

P.s.: Some technical stuff… There’s Django with its i18n support under the hood. The UI is powered by jQuery.

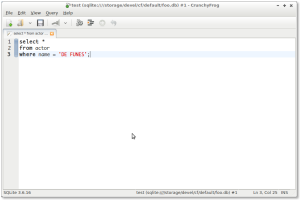

CrunchyFrog 0.4.1 released

A new bugfix release of CrunchyFrog is out in the wild. There aren’t many visible changes, but there are a few bug fixes under the hood. One visible change is the option to give the SQL editor much more space by hiding all other UI elements in the main window. Here’s a screenshot:

The second screenshot shows the new highlighting of errors in the editor. In this case there’s no table called “actor”. When the execution of a statement results in an SQL error, CrunchyFrog now tries to find the position of the error in the SQL editor, highlights the corresponding part of your SQL statement and moves the cursor to the right place. I find it very handy to have the cursor already in place, so I can correct the error and run the statement again without searching for the error first.
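Locating the error usually comes down to turning a character offset reported by the database into a line and column in the editor. A minimal sketch, assuming the database reports a 1-based character offset into the statement (PostgreSQL does this for many errors, including a missing table); offset_to_line_col is a hypothetical helper, not CrunchyFrog’s actual code:

```python
def offset_to_line_col(sql, position):
    """Map a 1-based character offset in sql to a 1-based (line, column) pair."""
    if not 1 <= position <= len(sql):
        raise ValueError('position %d is outside the statement' % position)
    before = sql[:position - 1]           # text preceding the error
    line = before.count('\n') + 1
    line_start = before.rfind('\n') + 1   # 0-based index where that line starts
    column = position - line_start        # 1-based column of the error
    return line, column


sql = 'select *\nfrom actor;'
print(offset_to_line_col(sql, sql.index('actor') + 1))  # (2, 6)
```

An editor can then place the cursor at that line and column and highlight the token starting there.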

If you’re interested in the other, non-visible changes, please read the full list of changes or just go straight to the project page and give it a try :)

Thanks to everyone who contributed to this release by sending patches and submitting issues on the tracker!

“Thank you, Mark”

The quote in the title is something that Dana Colley said after giving credit to the members of Orchestra Morphine on a show circulating through the internet about nine years ago. I think this show is still available on the HI-N-DRY website and I strongly recommend buying it; it’s a fascinating recording.

I’ve chosen this quote because it expresses what I’m thinking today, on the 10th anniversary of Mark Sandman’s passing. His music has shaped me more than any other music I listen to. He had that idea of a unique sound, not to mention the great lyrics. It’s not easy to explain to someone who has never listened to Morphine what this idea is about. It’s not even easy to explain it to people who have had to hear quite a lot of Morphine over the past ten (and more) years (many, many thanks to my family for their patience with my musical taste, which they call “a bit strange” from time to time :)

Working on hgsvn

Yesterday I took over maintenance of hgsvn from its original author Antoine Pitrou. Many thanks to Antoine at this point for doing a great job on this tool!

In case you don’t know, hgsvn is “a set of scripts to work locally on Subversion checkouts using Mercurial.”

My aim is not to make these scripts a full-blown Subversion extension for Mercurial. hgsubversion or the convert extension will probably do a much better job, with tighter integration than is in the scope of hgsvn. So the main focus is on fixing bugs and on finishing and releasing the hgpushsvn script, which commits changes in your Mercurial repository back to Subversion.

The reason why I decided to work on this project is pretty simple: I’ve made good experiences with it and I still want to keep using it.

With hgpushsvn the tool provides three scripts for the most basic tasks:

- hgimportsvn initializes an hg repository and fetches the sources from svn, optionally starting at a specific revision.

- hgpullsvn pulls new changesets from svn into your local hg repository.

- hgpushsvn pushes your local commits back to the svn repository.

Having used hgsvn for a while now, I feel very comfortable with these scripts and they fit very well into my workflow.

The next things I’m about to do are:

- fix the unit tests; at least one is currently broken

- set up a buildbot to have some automated tests

- finish hgpushsvn

If you’re using hgsvn, please file bug reports or submit patches on the issue tracker.

How Do You Look When Merging Fails ;-)

There was a Simpsons episode, I can’t recall exactly which one, but I think Bart recorded Lisa when her heart broke and watched it in slow motion to stop exactly at that moment.

I thought of this episode yesterday while playing around with my laptop’s webcam and a Python shell. In the end I wrote a little fun script that does almost the same: just register it as an hg hook and it takes a picture of you at the very moment a merge fails and sends it directly, without any further questions, to Twitpic and Twitter:

```python
#!/usr/bin/env python
import os
import tempfile
import time

from CVtypes import cv
from twitpic import TwitPicAPI

DEVICE = 0
TWITTER_USER = 'xxx'  # CHANGE THIS!
TWITTER_PWD = 'xxx'  # CHANGE THIS!

# This is the time in seconds you need to realize that the merge has
# failed. When setting this consider that it already takes about a second
# for the camera to take the picture. "0" means no delay ;-)
EMOTIONAL_SLUGGISHNESS_RATE = 0.0


def grab_image(fname):
    """Grab a single frame from the webcam and save it to fname."""
    camera = cv.CreateCameraCapture(DEVICE)
    frame = cv.QueryFrame(camera)
    cv.SaveImage(fname, frame)


def how_do_you_look():
    # Mercurial sets HG_ERROR to the *string* "0" on success and "1" on
    # failure, so bool() would be True in both cases; compare explicitly.
    failed = os.environ.get('HG_ERROR', '0') != '0'
    if not failed:
        return  # hmpf, maybe next time...
    fd, fname = tempfile.mkstemp('.jpg')
    os.close(fd)  # only the file name is needed
    try:
        if EMOTIONAL_SLUGGISHNESS_RATE > 0:
            time.sleep(EMOTIONAL_SLUGGISHNESS_RATE)
        grab_image(fname)
        twit = TwitPicAPI(TWITTER_USER, TWITTER_PWD)
        twit.upload(fname, post_to_twitter=True,
                    message='Another merge failed.')
    finally:
        os.remove(fname)  # clean up even if the upload fails


if __name__ == '__main__':
    how_do_you_look()
```

You’ll need the CVtypes OpenCV wrapper and this Twitpic Python module. I’ve patched the twitpic module to support messages. Check this issue to see whether messages are already supported; if not, a diff that adds the message keyword is attached to the issue. To use the script as a Mercurial hook, add the following to .hg/hgrc:

```
[hooks]
update = /path/to/the/above/script.py
```

and make the script executable.

The results are pretty good :)

Have fun!

BTW, the way the camera is accessed is inspired by this nice blog post about face recognition using OpenCV.

Edit (2009-05-12): It was Ralph, not Lisa. Thanks Florian!

App Engine Tracebacks via E-Mail

Edit 2009-09-04: Starting with App Engine SDK 1.2.5 released today there’s now a much smarter approach. See the docstring of the ereporter module for details!

Django’s error reporting via e-mail is something I’ve missed for my App Engine applications. If you’ve followed the instructions on how to use Django on App Engine errors are already logged. But honestly, I don’t check the logs very often and sometimes it’s just a silly typo that’s fixed in a minute ;-)

So if you’ve followed the instructions you can simply extend the log_exception function to send an email after the exception is logged:

```python
def log_exception(*args, **kwds):
    """Django signal handler to log an exception."""
    excinfo = sys.exc_info()
    cls, err = excinfo[:2]
    subject = 'Exception in request: %s: %s' % (cls.__name__, err)
    logging.exception(subject)

    # Send an email to the admins
    if 'request' in kwds:
        try:
            repr_request = repr(kwds['request'])
        except Exception:
            repr_request = 'Request repr() not available.'
    else:
        repr_request = 'Request not available.'
    msg = ('Application: %s\nVersion: %s\n\n%s\n\n%s'
           % (os.getenv('APPLICATION_ID'), os.getenv('CURRENT_VERSION_ID'),
              ''.join(traceback.format_exception(*excinfo)),
              repr_request))
    subject = '[%s] %s' % (os.getenv('APPLICATION_ID'), subject)
    mail.send_mail_to_admins('APP_ADMIN@EXAMPLE.COM',
                             subject,
                             msg)
```

Make sure to replace “APP_ADMIN@EXAMPLE.COM” with the email address of an admin for this application and to add these two imports somewhere at the top of your main.py:

```python
import traceback
from google.appengine.api import mail
```

You don’t need to comment this out during development to avoid lots of useless mails. The dev server doesn’t fully implement send_mail_to_admins(), most likely because there’s no real concept of a designated admin user in the SDK. It just writes a short message to the log, even if the sendmail option is enabled.
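Since the dev server won’t actually send the mail, it can help to test the message assembly on its own. A minimal sketch: build_error_report is a hypothetical helper that reproduces the subject and body formatting of log_exception above as a pure function, with the application ID and version passed in instead of read from APPLICATION_ID and CURRENT_VERSION_ID.

```python
import sys
import traceback


def build_error_report(exc_info, app_id, version, request_repr):
    """Return (subject, body) for an exception, formatted like log_exception."""
    cls, err = exc_info[:2]
    subject = '[%s] Exception in request: %s: %s' % (app_id, cls.__name__, err)
    body = 'Application: %s\nVersion: %s\n\n%s\n\n%s' % (
        app_id, version,
        ''.join(traceback.format_exception(*exc_info)),
        request_repr)
    return subject, body


try:
    1 / 0
except ZeroDivisionError:
    subject, body = build_error_report(sys.exc_info(), 'myapp', '1.1',
                                       'Request not available.')
```

log_exception could then call this helper and pass the result straight to mail.send_mail_to_admins().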

P.s.: If you use webapp instead of Django have a look at the seventh slide of this presentation by Ken Ashcraft. He shows a similar implementation for webapp based applications.

SQL Parsing with Python, Pt. II

After I’ve described some basics in part I, let’s have a closer look at the actual Python module called sqlparse. First off, have a look at the project page on how to download and install this module in case you’re interested. But now, let’s have some fun…

The API of the module is pretty simple. It provides three top-level functions: sqlparse.split(sql) splits sql into separate statements, sqlparse.parse(sql) parses sql and returns a tree-like structure, and sqlparse.format(sql, **kwds) returns a beautified version of sql according to kwds.

As mentioned in my previous post, the amount of work needed to build a return value depends on how much lexing and parsing is required to find the result. For example, sqlparse.split() does the following:

- Generate a token-type/value stream, basically with a copy of the Pygments lexer

- Apply a filter (in terms of a Pygments stream filter) to find statements

- Serialize the token stream back to unicode
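The three steps above can be sketched with the standard library alone. This is a hypothetical, much-simplified lexer (quoted strings, line comments, semicolons and "everything else"), not the Pygments-based one sqlparse uses; it only shows why lexing first matters: a semicolon inside a string or comment never ends a statement.

```python
import re

TOKEN_RE = re.compile(r"""
      (?P<string>'(?:[^']|'')*')   # single-quoted literal; '' is an escaped quote
    | (?P<comment>--[^\n]*)        # line comment, may contain semicolons
    | (?P<semicolon>;)             # statement terminator
    | (?P<other>[^';-]+|-)         # everything else, including a lone minus
    | (?P<stray>')                 # unterminated quote: keep it, don't fail
""", re.VERBOSE)


def lex(sql):
    """Step 1: yield (ttype, value) pairs covering the whole input."""
    for match in TOKEN_RE.finditer(sql):
        yield match.lastgroup, match.group()


def split(sql):
    """Steps 2 and 3: cut the stream after each ';', then join values back."""
    statements, current = [], []
    for ttype, value in lex(sql):
        current.append(value)
        if ttype == 'semicolon':
            statements.append(''.join(current).strip())
            current = []
    rest = ''.join(current).strip()
    if rest:  # trailing statement without a semicolon
        statements.append(rest)
    return statements


print(split("select 'a;b' from t; select 2"))
```

Because every character ends up in some token, joining the values reproduces the input exactly, which keeps the splitter non-validating.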

sqlparse.parse() does all the grouping work, and sqlparse.format() runs through those groups, modifies them according to the given formatting rules and finally converts them back to unicode.

Here’s an example session in a Python shell:

```python
>>> import sqlparse
>>> # Splitting statements:
>>> sql = 'select * from foo; select * from bar;'
>>> sqlparse.split(sql)
>>> # Formatting statements:
>>> sql = 'select * from foo where id in (select id from bar);'
>>> print sqlparse.format(sql, reindent=True, keyword_case='upper')
SELECT * FROM foo WHERE id IN (SELECT id FROM bar);
>>> # Parsing
>>> sql = 'select * from "someschema"."mytable" where id = 1'
>>> res = sqlparse.parse(sql)
>>> res
>>> stmt = res[0]
>>> stmt.to_unicode()  # converting it back to unicode
>>> # This is what the internal representation looks like:
>>> stmt.tokens
```

Now, how does the grouping work? Grouping is done with a set of simple functions. Each function searches for a simple pattern and if it finds one a new group is built. Let’s have a look at the function that finds the WHERE clauses.

```python
# T (token types) and Where (the group class) come from sqlparse's internals.
def group_where(tlist):
    # Bottom-up: group WHERE clauses in nested sublists first.
    for sgroup in tlist.get_sublists():
        if not isinstance(sgroup, Where):
            group_where(sgroup)
    idx = 0
    token = tlist.token_next_match(idx, T.Keyword, 'WHERE')
    stopwords = ('ORDER', 'GROUP', 'LIMIT', 'UNION')
    while token:
        tidx = tlist.token_index(token)
        end = tlist.token_next_match(tidx + 1, T.Keyword, stopwords)
        if end is None:  # WHERE is at the end of the statement
            end = tlist.tokens[-1]
        else:
            end = tlist.tokens[tlist.token_index(end) - 1]
        group = tlist.group_tokens(Where, tlist.tokens_between(token, end))
        idx = tlist.token_index(group)
        token = tlist.token_next_match(idx, T.Keyword, 'WHERE')
```

tlist is a list of tokens. Possible subgroups are handled first (bottom-up approach). Then the function grabs the first occurring “WHERE” and looks for the next matching stop word. I’m pretty unsure whether the stop-word approach is right here, but at least it works for now… If it finds a stop word, the tokens from WHERE up to (but not including) the stop word are grouped into an instance of the Where class; if there is none, the group extends to the end of the statement. Still within the while loop, the search for the next WHERE keyword then continues from the new group.
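To see the stop-word technique in isolation, here is a self-contained sketch on a flat list of (ttype, value) tuples. It is hypothetical and simplified (no recursion into subgroups, no whitespace tokens, a list subclass instead of sqlparse’s token classes), but it groups WHERE clauses the same way and, like the original, leaves input it doesn’t recognise untouched.

```python
KEYWORD = 'Keyword'
STOPWORDS = ('ORDER', 'GROUP', 'LIMIT', 'UNION')


class Where(list):
    """A group of tokens that together form a WHERE clause."""


def is_keyword(token, values):
    ttype, value = token
    return ttype == KEYWORD and value.upper() in values


def group_where(tokens):
    """Return a new list in which each WHERE clause is wrapped in a Where group."""
    result, i = [], 0
    while i < len(tokens):
        if is_keyword(tokens[i], ('WHERE',)):
            end = i + 1
            # Extend the clause up to the next stop word or the end of the list.
            while end < len(tokens) and not is_keyword(tokens[end], STOPWORDS):
                end += 1
            result.append(Where(tokens[i:end]))
            i = end
        else:
            # Anything else is passed through unchanged: no validation.
            result.append(tokens[i])
            i += 1
    return result


tokens = [(KEYWORD, 'select'), ('Wildcard', '*'), (KEYWORD, 'from'),
          ('Name', 'foo'), (KEYWORD, 'where'), ('Name', 'id'),
          ('Operator', '='), ('Number', '1'), (KEYWORD, 'order'),
          (KEYWORD, 'by'), ('Name', 'id')]
grouped = group_where(tokens)
```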

So why not use a grammar here? First, this piece of code is pretty simple and easy to maintain. But it can also handle grammatically incorrect statements more leniently: for example, it’s no problem to add an if clause so that, when an unexpected token occurs, the function just jumps to the next occurrence of WHERE without changing anything or raising an exception. To achieve this, the token classes provide helper functions to inspect the surroundings of an occurrence (in fact, just simple list operations). There’s no limit to what a grouping function can do with the given token list, so you could even “guess” a proper group with some nasty algorithm.

The current problem with this approach is performance. Here are some numbers:

| Input size | split() | parse() |
|---|---|---|
| 100 KB (20,600 tokens) | 0.3 s | 1.8 s |
| 1.9 MB (412,500 tokens) | 5.53 s | 37 s |

Most of the performance is lost by giving up the stream-oriented approach in the parsing phase. The numbers are based on the first unrevised working version. I expect performance improvements in upcoming versions, especially in how token lists are handled behind the scenes. For real-life statements the parser behaves quite well. BTW, the Pygments lexer takes about 6 seconds (compared to 5.5 seconds for splitting) for the 1.9 MB of SQL.

The non-validating approach is a disadvantage too: you’ll never know whether a statement is valid. You can even parse Middle High German phrases and get a result:

```python
>>> sqlparse.parse('swer an rehte güete wendet sîn gemüete')[0].tokens
```

It’s up to the user of this module to provide suitable input and to interpret the output. Furthermore, the parser doesn’t support every nifty corner of every SQL dialect. Currently it’s mostly ANSI SQL with some PostgreSQL-specific stuff. But it should be easy to implement further grouping functions to support more SQL varieties.

The splitting feature is currently used by CrunchyFrog and does a pretty good job there. I assume that SQL splitting works stably and reliably in most cases. Beautifying and parsing are very new in the module, and their full functionality has yet to be proven over time. Luckily the top-level API with its three functions is damn simple and keeps the door open for significant changes behind the scenes if they’re needed.

The sources of the sqlparse module are currently hosted on github.com but may move to Google Code soon. Refer to the project page on Google Code for downloads and how to access the sources.

In addition, there’s a simple App Engine application that exposes the formatting features as an online service.

SQL Parsing with Python, Pt. I

Some time ago I was looking for an SQL parser module for Python. As you can guess, my search wasn’t really successful. pyparsing needs a grammar, but I’m not really interested in writing a full-blown grammar for SQL with its various dialects. AFAICT Gadfly implements a customized SQL parser, but I was not able to figure out how to use it. And there’s sqlliterals, but as the name suggests it’s just for identifying literals within statements.

I expect such a module to do the following:

- It should be fast ;-)

- scalable – what the parser needs to know about a string containing SQL statements depends on what I want to do with it. If I just want to split that string in separate statements I don’t need to know as much as when I want to know what identifiers occur in it.

- non-validating – Parsing shouldn’t fail if the statement is syntactically incorrect as I want to use it for an SQL editor where the statements are (naturally) incorrect most of the time.

- some beautifying is a nice-to-have – We all know SQL generated by a script and copied from a logging output to a database front-end – it always looks ugly and is pretty unreadable.

The only thing I’ve found that comes close to my needs is Pygments (yes, the syntax highlighter). It does a really good job highlighting SQL, so it “understands” at least something. To be concrete, it has a rock-solid and fast lexer. There’s a FAQ entry asking whether it’s possible to use Pygments for programming language processing. The answer is:

The Pygments lexing machinery is quite powerful can be used to build lexers for basically all languages. However, parsing them is not possible, though some lexers go some steps in this direction in order to e.g. highlight function names differently.

Also, error reporting is not the scope of Pygments. It focuses on correctly highlighting syntactically valid documents, not finding and compensating errors.

There’s a nice distinction between lexers and parsers in this answer. The former does lexical and the latter syntactic analysis of a given stream of characters. For my needs this led to a two-step process: first do the lexing using Pygments’ mechanism (BTW, read “focuses on […] syntactically valid documents, not finding […] errors” as “non-validating”) and then add parsing on top of the token stream.

So I stripped the SQL lexing mechanism out of Pygments to get rid of some overhead not needed for my purposes (e.g. loading of extensions). The only changes to the Pygments tokenizer were replacing a huge regex for finding keywords with a dictionary-based lookup and adding a few new tokens to make life easier in the second processing stage. The performance gain from replacing the regex wouldn’t play a significant role for Pygments itself; it just speeds up the lexing a bit.
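The idea behind the dictionary-based lookup can be sketched like this, assuming a heavily trimmed keyword table and made-up token-type strings (neither is taken from Pygments or sqlparse): one generic regex matches every word, and a dict lookup then decides whether that word is a keyword, instead of first trying one huge alternation of all keywords.

```python
import re

# Hypothetical, tiny keyword table; a real SQL lexer knows hundreds of keywords.
KEYWORDS = {
    'SELECT': 'Keyword.DML',
    'INSERT': 'Keyword.DML',
    'FROM': 'Keyword',
    'WHERE': 'Keyword',
    'ORDER': 'Keyword',
}

# One generic pattern for all words instead of a regex listing every keyword.
WORD_RE = re.compile(r'[A-Za-z_][A-Za-z0-9_]*')


def classify_word(word):
    """Decide the token type of a word with a dictionary lookup."""
    return KEYWORDS.get(word.upper(), 'Name')


def lex_words(sql):
    """Yield (ttype, value) for every word in sql; other characters are skipped."""
    for match in WORD_RE.finditer(sql):
        yield classify_word(match.group()), match.group()


print(list(lex_words('select id from Foo')))
```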

In the second step the very efficient token-type/value stream generated in step 1 is grouped into nested lists of instances of simple classes. Instantiating a bunch of classes results in a performance loss, but with the benefit of some helpful methods for analyzing the now classified tokens. As the classes are pretty simple, most of the performance loss was recaptured by using __slots__.
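A minimal sketch of such token classes, assuming hypothetical names and attributes rather than sqlparse’s real ones: declaring __slots__ means instances carry no per-instance __dict__, which saves memory and attribute-lookup overhead when a statement produces hundreds of thousands of tokens.

```python
class Token:
    """A single lexed token; __slots__ avoids a per-instance __dict__."""
    __slots__ = ('ttype', 'value', 'parent')

    def __init__(self, ttype, value):
        self.ttype = ttype
        self.value = value
        self.parent = None

    def match(self, ttype, values):
        """True if the token has type ttype and its upper-cased value is in values."""
        return self.ttype == ttype and self.value.upper() in values


class TokenList(Token):
    """A group of tokens (e.g. a WHERE clause) that is itself a token."""
    __slots__ = ('tokens',)

    def __init__(self, tokens):
        Token.__init__(self, None, ''.join(t.value for t in tokens))
        self.tokens = list(tokens)
        for token in self.tokens:
            token.parent = self  # lets helpers inspect a token's surroundings


where = TokenList([Token('Keyword', 'where'), Token('Whitespace', ' '),
                   Token('Name', 'id')])
```

Helper methods such as match() are what make the classified tokens convenient to analyze in the grouping step.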

The grouping mechanism is again non-validating. It tries to find patterns like identifiers and their aliases or WHERE clauses and their conditions in the token stream, but it just leaves the tokens unchanged if it doesn’t find what it’s looking for. So it actually defines some sort of “grammar”, but a very permissive one. That means that the quality of answers to questions like “Is there a WHERE clause?” or “Which tables are affected?” heavily depends on the syntactic clarity of the given statement at the time it’s processed, and it’s up to the user or application using the module to interpret the answers correctly. As the module is meant as a helper during the development of SQL statements, it doesn’t insist on syntactic correctness the way the targeted database system does when the statement is executed.

All in all these are the two (simple) processing steps:

- Lexing: scan a given string using regular expressions and generate a token-type/value stream (in fact, this is the Pygments lexer).

- Parsing:

  - Instantiate a simple token class for each item in the stream to ease syntactic analysis.

  - Group those tokens into nested lists using simple pattern-finding functions.

Depending on what should be done with the statements, only the first step may be required. For example, splitting statements or applying simple formatting rules doesn’t require parsing.
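A formatting rule like upper-casing keywords is a good example of something that needs only the lexing step. The sketch below is hypothetical (its own toy tokenizer and keyword set, not sqlparse’s code): a stream filter rewrites keyword tokens and passes everything else through. Note that the string literal 'from' stays untouched because the lexer already classified it as a string.

```python
import re

# Hypothetical keyword set and tokenizer, only rich enough for this example.
KEYWORDS = {'SELECT', 'FROM', 'WHERE', 'AND', 'OR', 'IN'}
TOKEN_RE = re.compile(r"'(?:[^']|'')*'|\w+|\s+|.", re.DOTALL)


def lex(sql):
    """Step 1 only: yield (ttype, value) pairs, no grouping."""
    for match in TOKEN_RE.finditer(sql):
        value = match.group()
        if value.upper() in KEYWORDS:
            yield 'Keyword', value
        elif value.startswith("'"):
            yield 'String', value
        else:
            yield 'Other', value


def keyword_case_upper(stream):
    """Stream filter: upper-case keywords, pass everything else through."""
    for ttype, value in stream:
        yield ttype, (value.upper() if ttype == 'Keyword' else value)


def format_sql(sql):
    """Lex, filter, and serialize the token stream back into a string."""
    return ''.join(value for _, value in keyword_case_upper(lex(sql)))


print(format_sql("select name from t where name = 'from'"))
```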

Pt. II of this blog post will cover the two remaining points: scalability and the beautifying goodie. It will also take a closer look at the module I came up with, including some performance stats. For the impatient: the source code of this module is available here.